POML: The Rise of Structured Prompt Engineering and the Prospect of AI Application Architecture's 'New Trinity'

Introduction

In today's rapidly advancing artificial intelligence (AI) landscape, prompt engineering is transforming from an intuition-based "art" into a systematic "engineering" practice. POML (Prompt Orchestration Markup Language), launched by Microsoft in 2025 as a structured markup language, injects new momentum into this transformation. POML not only addresses the chaos and inefficiency of traditional prompt engineering but also heralds the potential for AI application architecture to embrace a paradigm similar to web development's "HTML/CSS/JS trinity." Based on an in-depth research report, this article provides a detailed analysis of POML's core technology, analogies to web architecture, practical application scenarios, and future potential, offering actionable insights for developers and enterprises.

POML Ushers in a New Era of Prompt Engineering

POML, launched by Microsoft Research, draws inspiration from HTML and XML, aiming to decompose complex prompts into clear components through modular, semantic tags (such as <role>, <task>), solving the pain points of traditional "prompt spaghetti." It reshapes prompt engineering through the following features:

- Semantic tags: Improve prompt readability, maintainability, and reusability.

- Multimodal support: Seamlessly integrate text, tables, images, and other data.

- Style system: Inspired by CSS, separate content from presentation, simplifying A/B testing.

- Dynamic templates: Support variables, loops, and conditions for automation and personalization.

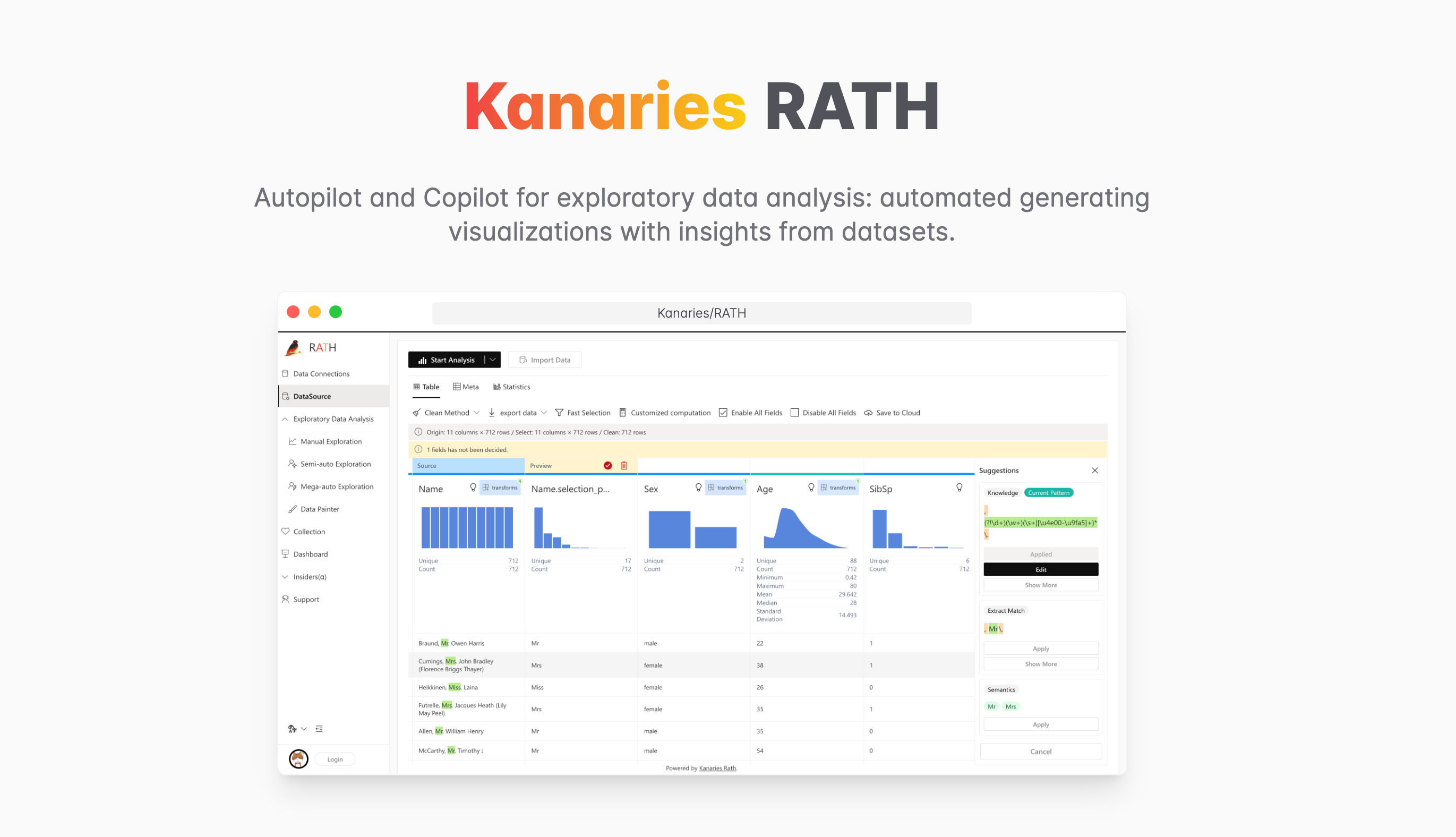

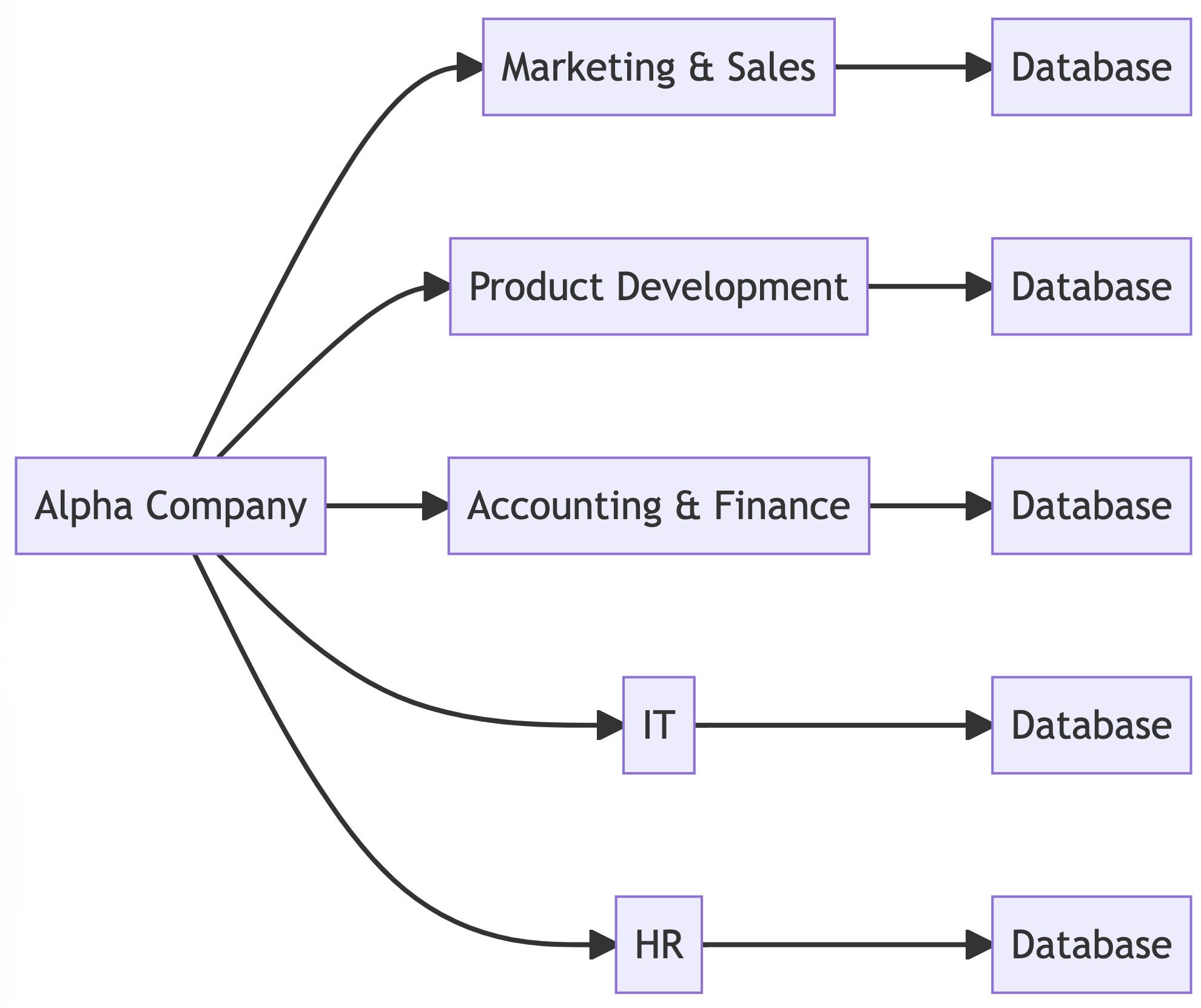

POML is not just a language but the structural layer of AI application architecture, forming the "new trinity" together with optimization tools (like PromptPerfect) and orchestration frameworks (like LangChain). This architecture highly aligns with the academically proposed "Prompt-Layered Architecture" (PLA) theory, elevating prompt management to "first-class citizen" status equivalent to traditional software development.

In the future, POML is expected to become the "communication protocol" and "configuration language" for multi-agent systems, laying the foundation for building scalable and auditable AI applications. While the community debates its complexity, its potential cannot be ignored. This article will provide practical advice to help enterprises embrace this transformation.